
Ma...tri...ces..

- Thread starter leehuan


This is preposterous... What's the easiest way to do this question?

Writing out the components takes way too long

Expand using the distributive law and the given fact that AB = BA. So:

(A – B)(A + B) = A(A + B) – B(A + B) (by distributivity of matrix multiplication)

= A^2 + AB – BA – B^2 (by distributivity of matrix multiplication and since XX = X^2 for any square matrix X, by definition)

= A^2 + O – B^2, since we are given that AB = BA, which implies that AB – BA = O (the zero matrix)

= A^2 – B^2.
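A quick numerical sanity check of the identity, as a sketch in plain Python (the helpers `matmul`, `add` and `lhs_rhs` are hypothetical names introduced here, not from the thread). It compares (A – B)(A + B) with A^2 – B^2 for a commuting pair, where B = A^2 + 2I so that AB = BA automatically, and for a non-commuting pair, where the identity fails:

```python
# Matrices are lists of rows; no NumPy assumed.

def matmul(X, Y):
    """Matrix product: [XY]_ij = sum_k X_ik * Y_kj."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def add(X, Y, sign=1):
    """Entrywise X + sign*Y (sign=-1 gives X - Y)."""
    return [[x + sign * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def lhs_rhs(A, B):
    """Return the pair ((A - B)(A + B), A^2 - B^2)."""
    lhs = matmul(add(A, B, -1), add(A, B))
    rhs = add(matmul(A, A), matmul(B, B), -1)
    return lhs, rhs

# Commuting pair: B = A^2 + 2I is a polynomial in A, so AB = BA.
A = [[1, 2], [3, 4]]
B = add(matmul(A, A), [[2, 0], [0, 2]])
print(matmul(A, B) == matmul(B, A))   # True
lhs, rhs = lhs_rhs(A, B)
print(lhs == rhs)                     # True

# Non-commuting pair: the identity can fail.
C = [[0, 1], [0, 0]]
D = [[0, 0], [1, 0]]
print(matmul(C, D) == matmul(D, C))   # False
lhs, rhs = lhs_rhs(C, D)
print(lhs == rhs)                     # False
```

The second pair shows the hypothesis is genuinely needed: in general (A – B)(A + B) = A^2 – B^2 + (AB – BA), and here AB – BA is not O.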


KingOfActing

lukewarm mess


Oh wow, this is what happens when I forget that matrices satisfy other laws too...

Ok, another question. Using just a paragraph I can explain it. But if I wanted my algebra to supplement it, how would I do this?

Let A be m x n. Since AB is defined, B is n x p for some p.

Since BA is defined, the number of columns of B (p) must equal the number of rows of A (m), which implies p = m.

Now, AB is m x p (since A is m x n and B is n x p), i.e. m x m.

Similarly, BA is (n x m)(m x n), i.e. n x n.

(m, n, p positive integers)
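The same dimension bookkeeping can be checked mechanically. This is a small sketch (the function `product_shape` and the extra size `r` are hypothetical, introduced only for illustration) that tries every small size for B and confirms that whenever both AB and BA are defined, B must be n x m and both products are square:

```python
def product_shape(shape_x, shape_y):
    """Return the shape of XY for shapes (rows, cols), or None if XY is undefined."""
    (rows_x, cols_x), (rows_y, cols_y) = shape_x, shape_y
    return (rows_x, cols_y) if cols_x == rows_y else None

# Exhaustively check small sizes: A is m x n, B is r x p with r, p unconstrained.
for m in range(1, 6):
    for n in range(1, 6):
        for r in range(1, 6):
            for p in range(1, 6):
                ab = product_shape((m, n), (r, p))
                ba = product_shape((r, p), (m, n))
                if ab is not None and ba is not None:
                    assert (r, p) == (n, m)               # B is forced to be n x m
                    assert ab == (m, m) and ba == (n, n)  # both products are square

print(product_shape((2, 3), (3, 2)))  # AB for A 2 x 3, B 3 x 2: (2, 2)
print(product_shape((3, 2), (2, 3)))  # BA for the same pair: (3, 3)
```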

leehuan

Well-Known Member

- Joined

- May 31, 2014

- Messages

- 5,768

- Gender

- Male

- HSC

- 2015

Alright, fair enough, that's what I did.

The question: Prove the commutative law of matrix addition for matrices A and B of the same size.

Can't I just break up the matrix (A + B) into (A) + (B) and then use the commutative law for R before reverting?

Yeah, pretty much.
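Written out entrywise, that is the whole proof. Here a_{ij} and b_{ij} denote the (i, j) entries of A and B (notation assumed here, not taken from the question), and the middle step is the commutative law in R:

```latex
[A + B]_{ij} = a_{ij} + b_{ij} = b_{ij} + a_{ij} = [B + A]_{ij}
\quad \text{for every } i, j, \text{ so } A + B = B + A.
```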

Since AI = A, can we just assume that I^n = I? (n in Z)

I^n = I can be proved by induction using that fact, yes.
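A sketch of that induction, for positive integers n, using AI = A with A = I:

```latex
\begin{aligned}
&\text{Base case: } I^1 = I. \\
&\text{Inductive step: if } I^k = I, \text{ then } I^{k+1} = I^k I = I I = I.
\end{aligned}
```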

leehuan


Let A and B be square matrices such that A^2 = I, B^2 = I and (AB)^2 = I. Prove that AB = BA.

So, keeping in mind that I^2 = I, I get A^2 B^2 = II = I.

This means that AABB = I.

The condition (AB)^2 = I also implies ABAB = I.

So, equating, AABB = ABAB, which is not trivial as matrix multiplication is not commutative.

Can I therefore argue that AB = BA because the sequence of multiplication matters? I feel that this is flawed because you can't cancel out matrices: AB = AC does not imply B = C.

Basically, I think I gave a wrong proof.


Paradoxica

-insert title here-

In the order given, you have AABB = ABAB.

The first and last matrices are identical and appear in the same positions, so they can be removed, leaving behind the central matrices.

The equivalence follows.
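Made explicit, the "removal" amounts to multiplying on the left by A and on the right by B, then using A^2 = I and B^2 = I. This is a sketch of that step, and it relies on A and B being their own inverses:

```latex
\begin{aligned}
AABB = ABAB
&\implies A(AABB) = A(ABAB) \implies (AA)ABB = (AA)BAB \implies ABB = BAB \\
&\implies (ABB)B = (BAB)B \implies A(BB)B = BA(BB) \implies AB = BA.
\end{aligned}
```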

Note:

AB = A I B

= A (A^2 AB AB B^2) B (using the given equalities involving I, noting that AB AB = I, as (AB)^2 = AB AB)

= A A A A (B A) B B B B

= I I BA I I (as A^2 = I = B^2)

= BA.

(Of course we have used associativity of matrix multiplication a lot here.)
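A brute-force sanity check, not a proof: it only searches 2x2 matrices with entries in {-1, 0, 1}, a search space chosen arbitrarily here. It keeps every pair A, B with A^2 = I, B^2 = I and (AB)^2 = I and asserts AB = BA for each one (the helper names are hypothetical):

```python
from itertools import product

def matmul(X, Y):
    """2x2 matrix product; matrices are tuples of rows so they compare with ==."""
    return tuple(tuple(sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2))
                 for i in range(2))

I = ((1, 0), (0, 1))

# All 2x2 matrices with entries in {-1, 0, 1}.
mats = [((a, b), (c, d)) for a, b, c, d in product((-1, 0, 1), repeat=4)]

# Keep only the matrices with M^2 = I.
involutions = [M for M in mats if matmul(M, M) == I]

checked = 0
for A in involutions:
    for B in involutions:
        AB = matmul(A, B)
        if matmul(AB, AB) == I:          # (AB)^2 = I
            assert AB == matmul(B, A)    # the claim: AB = BA
            checked += 1

print(len(involutions), checked)
```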


The cancellation argument (removing the outer A and B from AABB = ABAB) essentially assumes that A and B are invertible. That is indeed true: it can be proved by consideration of determinants, or from the definition of inverses (A^2 = I says that A is its own inverse, and similarly for B). If we are allowed to use knowledge of matrix inverses, we can do the proof like that, or in other ways using inverses.
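For instance, one way with inverses (a sketch): A^2 = I means A^{-1} = A, and likewise B^{-1} = B and (AB)^{-1} = AB. Then, using (XY)^{-1} = Y^{-1}X^{-1}:

```latex
AB = (AB)^{-1} = B^{-1} A^{-1} = BA.
```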


leehuan


Ok, I'm inclined to say that's magic, even though it's really just reuse of the associative law...

_______________________________

Let A be a 2x2 real matrix such that AX = XA for all 2x2 real matrices X. Show that A is a scalar multiple of the identity matrix.

Hint please?

_______________________________

Confession: Wow, I've felt dumb about maths since uni, but THIS has taken me aback on new levels.


Put some in a spoiler because I realised you only wanted a hint.

seanieg89

Well-Known Member

- Joined

- Aug 8, 2006

- Messages

- 2,653

- Gender

- Male

- HSC

- 2007

Yep, since it's 2x2, they clearly want you to just equate entries and make the deductions from there.
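For instance, two choices of X already pin A down. Writing A with entries a, b, c, d, and letting E_{12} and E_{21} be the matrices with a single 1 in position (1, 2) or (2, 1) (names introduced here), equating entries gives:

```latex
\begin{aligned}
A &= \begin{pmatrix} a & b \\ c & d \end{pmatrix}, \quad
E_{12} = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \quad
E_{21} = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix} \\
AE_{12} &= \begin{pmatrix} 0 & a \\ 0 & c \end{pmatrix} = \begin{pmatrix} c & d \\ 0 & 0 \end{pmatrix} = E_{12}A
\implies c = 0, \ a = d \\
AE_{21} &= \begin{pmatrix} b & 0 \\ d & 0 \end{pmatrix} = \begin{pmatrix} 0 & 0 \\ a & b \end{pmatrix} = E_{21}A
\implies b = 0, \ a = d
\end{aligned}
```

So A = aI.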

The same result is true in higher dimensions too, and can be proved as follows:

Every n x n matrix over R has a complex eigenvalue. (From its characteristic polynomial and the fundamental theorem of algebra.)

So suppose Av=sv for some complex number s and some nonzero v in C^n.

Then AXv=XAv=sXv for all matrices X in Mat(C,n). (That AX=XA for all COMPLEX X follows from splitting X into its real and imaginary parts).

I.e. A(Xv) = s(Xv), so Xv is an s-eigenvector (or zero) for every complex matrix X. But by choosing X appropriately, we can map v to any w in C^n that we like. (Much more than this is true: we can find a complex invertible matrix that maps an arbitrary basis of C^n to any other basis.)

So Aw=sw for all w in C^n and (A-sI)w=0 for all w in C^n.

As only the zero matrix is identically zero as a linear map (check!), we conclude that A=sI. (At this point it is also clear that s must in fact be real.)

Edit: You don't need the existence of an eigenvector to prove this property for matrices over more general fields; you can also just take X to successively be the matrix whose only nonzero entry is "1" in position (i,j). The collection of resulting equations then also tells you that the matrix must be a constant multiple of the identity. I just did it the way above because it is quite a natural proof even if you are talking about linear operators rather than matrices (it is essentially coordinate-free in nature).
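A brute-force check of the elementary-matrix version of the argument from the edit, restricted (arbitrarily, to keep it fast) to integer entries in {-1, 0, 1} and n = 2, 3. It is a sanity check under those assumptions, not a proof, and the helper names are hypothetical:

```python
from itertools import product

def matmul(X, Y):
    """Product of two n x n matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def unit(n, i, j):
    """The n x n matrix whose only nonzero entry is 1 in position (i, j)."""
    return [[1 if (r, c) == (i, j) else 0 for c in range(n)] for r in range(n)]

def commutes_with_all_units(A):
    """True if AX = XA for every unit matrix X."""
    n = len(A)
    units = [unit(n, i, j) for i in range(n) for j in range(n)]
    return all(matmul(A, X) == matmul(X, A) for X in units)

def is_scalar(A):
    """True if A = cI, where c = A[0][0]."""
    n = len(A)
    return all(A[i][j] == (A[0][0] if i == j else 0)
               for i in range(n) for j in range(n))

found = {}
for n in (2, 3):
    found[n] = []
    for entries in product((-1, 0, 1), repeat=n * n):
        A = [list(entries[r * n:(r + 1) * n]) for r in range(n)]
        if commutes_with_all_units(A):
            assert is_scalar(A), A      # the claim: A must be a multiple of I
            found[n].append(A)

print({n: len(v) for n, v in found.items()})  # {2: 3, 3: 3}, i.e. -I, O, I
```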


Paradoxica


Spoilers don't even work properly on this forum.

This forum should go take the standards of other forums, like archive.ninjakiwi.com, where the spoiler actually minimises away the subject entry.

leehuan


One more question here, and then if I have more I'm making a new thread.

A(BC) = (AB)C

Either hint or full answer please, thanks. Editing in what I have so far.

_______________________________

Suppose A is an mxn matrix and B is an nxp matrix. Then AB is an mxp matrix.

Suppose C is a pxq matrix. Then BC is an nxq matrix.

Redundancies

If A(BC) exists, then col.(A) = row.(BC) => n = n

If (AB)C exists, then col.(AB) = row.(C) => p = p

$$ {\left[ A(BC) \right]}_{ij} = \sum_{k=1}^{n} {\left[ A \right]}_{ik} {\left[ BC \right]}_{kj} = \sum_{k=1}^{n} {\left[ A \right]}_{ik} \left( \sum_{l=1}^{p} {\left[ B \right]}_{kl} {\left[ C \right]}_{lj} \right) $$

= leehuan thinks he is wrong again
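One way to finish (a sketch): the entries are real numbers, so distribute [A]_{ik} into the inner sum, swap the two finite sums, and regroup, for 1 ≤ i ≤ m and 1 ≤ j ≤ q:

```latex
\begin{aligned}
{\left[ A(BC) \right]}_{ij}
&= \sum_{k=1}^{n} {\left[ A \right]}_{ik} \left( \sum_{l=1}^{p} {\left[ B \right]}_{kl} {\left[ C \right]}_{lj} \right)
 = \sum_{k=1}^{n} \sum_{l=1}^{p} {\left[ A \right]}_{ik} {\left[ B \right]}_{kl} {\left[ C \right]}_{lj} \\
&= \sum_{l=1}^{p} \left( \sum_{k=1}^{n} {\left[ A \right]}_{ik} {\left[ B \right]}_{kl} \right) {\left[ C \right]}_{lj}
 = \sum_{l=1}^{p} {\left[ AB \right]}_{il} {\left[ C \right]}_{lj}
 = {\left[ (AB)C \right]}_{ij}.
\end{aligned}
```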


dan964

what

(You aren't wrong so far.)

As someone who is probably doing the exact/equivalent course but at UOW, I'd suggest letting D = BC and E = AB (i.e. you need to compute the stuff in the brackets first, as a matrix multiplication). You seem to be on the right track: expand the sums, noting that a_ij or whatever is a scalar, so things like associativity work with it.

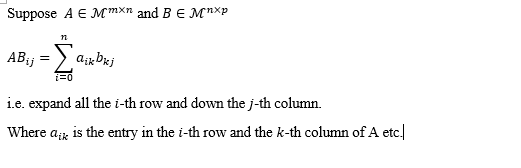

I would also use the notation (my tex skills are terrible) just a sec I'll put a screenshot.

etc. etc. (I might be missing some brackets, oh well)
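A quick numerical check of the same identity, computing the bracketed products first via D = BC and E = AB as suggested. Integer entries keep the comparison exact; the sizes, seed and helper names are arbitrary choices for illustration:

```python
import random

def matmul(X, Y):
    """[XY]_ij = sum_k [X]_ik [Y]_kj, for X of size m x n and Y of size n x p."""
    n = len(Y)
    assert all(len(row) == n for row in X), "columns of X must equal rows of Y"
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(len(Y[0]))]
            for i in range(len(X))]

def random_matrix(rows, cols, rng):
    """A rows x cols matrix of random integers in [-5, 5]."""
    return [[rng.randint(-5, 5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)   # fixed seed so the run is reproducible
m, n, p, q = 2, 3, 4, 5  # A is m x n, B is n x p, C is p x q
A = random_matrix(m, n, rng)
B = random_matrix(n, p, rng)
C = random_matrix(p, q, rng)

D = matmul(B, C)       # D = BC, n x q
E = matmul(A, B)       # E = AB, m x p
left = matmul(A, D)    # A(BC), m x q
right = matmul(E, C)   # (AB)C, m x q
print(left == right)   # True
```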
